SK Hynix begins mass production of HBM3E as global supply starts

SK Hynix plans to begin supplying global customers later this month.

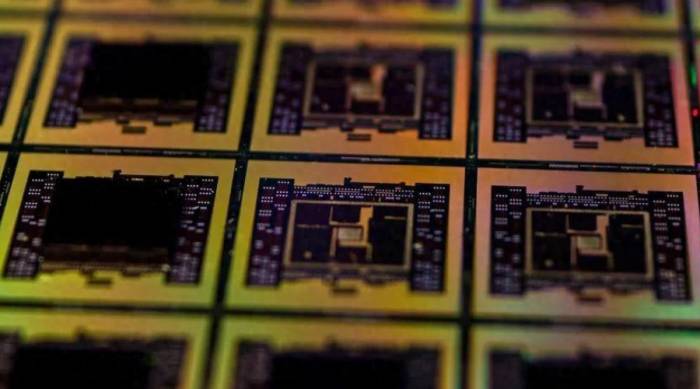

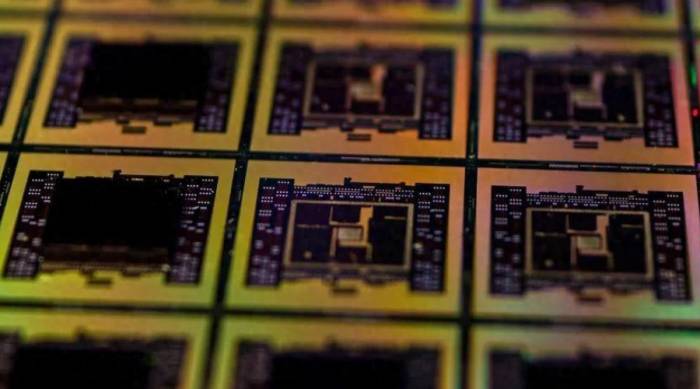

HBM (High Bandwidth Memory) is a type of stacked DRAM used alongside CPUs and GPUs. Since its introduction in January 2022, HBM3, the latest generation of high bandwidth memory, has quickly become a mainstay of high-performance computing thanks to its 2.5D/3D memory architecture. Like earlier generations, HBM3 uses a wide 1024-bit interface; running at 6.4 Gb/s per pin, it delivers up to 819 GB/s of bandwidth per stack.
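
The 819 GB/s figure follows directly from the interface width and the per-pin rate. The sketch below is a back-of-the-envelope illustration, assuming decimal units (1 GB = 10^9 bytes) and the 1024-bit, 6.4 Gb/s numbers quoted above; the helper name `stack_bandwidth_gbytes` is hypothetical.

```python
# Peak bandwidth of one HBM stack (illustrative helper, hypothetical name):
# interface width in bits x per-pin data rate in Gb/s gives Gb/s;
# dividing by 8 converts bits to bytes. Decimal units assumed.

def stack_bandwidth_gbytes(width_bits: int, pin_rate_gbps: float) -> float:
    """Return peak per-stack bandwidth in GB/s."""
    return width_bits * pin_rate_gbps / 8

hbm3 = stack_bandwidth_gbytes(1024, 6.4)
print(f"HBM3: {hbm3:.1f} GB/s")  # HBM3: 819.2 GB/s
```

Note the unit: the result is gigabytes per second per stack, not gigabits; an accelerator with several stacks multiplies this figure by the stack count.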

SK Hynix has now raised the bar again with HBM3E. The new memory retains the strengths of HBM3 while raising the per-pin data rate to 9.6 Gb/s, giving advanced AI workloads a substantial performance boost. With its high bandwidth, large capacity, and compact footprint, HBM3E is increasingly becoming the memory of choice for modern AI computing.
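
Applying the same width-times-rate arithmetic to HBM3E is a useful sanity check. This sketch assumes a 1024-bit interface and decimal units; the function names are hypothetical. At 9.6 Gb/s per pin a stack would peak at about 1.23 TB/s, 1.5x HBM3, while the 1.18 TB/s figure SK Hynix quotes for HBM3E corresponds to roughly 9.2 Gb/s per pin, so the two numbers appear to describe slightly different operating points.

```python
# Hypothetical helpers relating per-pin data rate and per-stack bandwidth
# for a 1024-bit HBM interface (decimal units: 1 GB/s = 1e9 bytes/s).
HBM_WIDTH_BITS = 1024

def stack_bandwidth_gbytes(pin_rate_gbps: float, width_bits: int = HBM_WIDTH_BITS) -> float:
    """Peak per-stack bandwidth in GB/s: width (bits) x rate (Gb/s) / 8."""
    return width_bits * pin_rate_gbps / 8

def pin_rate_for(bandwidth_gbytes: float, width_bits: int = HBM_WIDTH_BITS) -> float:
    """Per-pin data rate in Gb/s needed to reach a given per-stack bandwidth."""
    return bandwidth_gbytes * 8 / width_bits

hbm3 = stack_bandwidth_gbytes(6.4)    # 819.2 GB/s
hbm3e = stack_bandwidth_gbytes(9.6)   # 1228.8 GB/s
print(f"HBM3E at 9.6 Gb/s: {hbm3e:.1f} GB/s ({hbm3e / hbm3:.2f}x HBM3)")
print(f"Pin rate implied by 1.18 TB/s: {pin_rate_for(1180):.2f} Gb/s")
```

Working backwards from bandwidth to pin rate, as `pin_rate_for` does, is a quick way to check whether a quoted speed and a quoted bandwidth refer to the same configuration.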

SK Hynix recently announced that HBM3E, its latest ultra-high-performance AI memory product, has entered mass production, with supply to global customers scheduled to begin later this month. The milestone comes just seven months after the company announced development of the product last August, underscoring how quickly SK Hynix has executed.

SK Hynix, which describes itself as the first supplier of high-performance HBM3E DRAM, is extending the track record it established with HBM3. The company expects that mass-producing HBM3E, combined with its experience as the pioneering supplier of HBM3, will solidify its leadership in AI memory.

In today's AI-driven environment, fast data processing is essential, and semiconductor packaging plays a crucial role in delivering it. Major global technology companies are placing ever higher performance demands on AI semiconductors, and SK Hynix positions HBM3E as the best answer to those demands.

SK Hynix calls HBM3E the industry's leading AI memory product, citing its strength in every key metric, including speed and thermal management. It can process up to 1.18 TB of data per second, which the company equates to more than 230 full-HD movies in a single second.
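
The movie comparison can be checked by dividing the bandwidth by the number of movies. This sketch assumes decimal units (1 TB = 1000 GB); the per-movie size it prints is derived from the two quoted numbers, not stated in the article, and comes out to roughly 5 GB, a plausible size for a full-HD film file.

```python
# Back-of-the-envelope check of the "230 full-HD movies per second" comparison.
# Assumes decimal units; the movie size is an implied value, not a measurement.
BANDWIDTH_GB_PER_S = 1180  # 1.18 TB/s, the quoted HBM3E figure
MOVIES_PER_S = 230         # the comparison quoted in the article

implied_movie_size_gb = BANDWIDTH_GB_PER_S / MOVIES_PER_S
print(f"Implied size per movie: {implied_movie_size_gb:.2f} GB")  # 5.13 GB
```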

Because AI memory runs at very high speeds, effective thermal control is crucial. HBM3E uses SK Hynix's advanced MR-MUF (Mass Reflow Molded Underfill) process, which the company says improves heat dissipation by 10% over the previous generation.

MR-MUF is a core SK Hynix packaging technology: semiconductor dies are stacked, and a liquid protective material is injected into the gaps between them to improve heat dissipation and protect the circuits. Compared with conventional methods, the approach significantly improves thermal efficiency and has contributed to stable mass production of HBM.

SK Hynix HBM Business Head Sungsoo Ryu expressed confidence in the company's comprehensive AI memory lineup now that HBM3E has reached mass production.

SK Hynix's HBM production capacity is approximately 120K, although the actual figure may vary depending on verification progress and customer orders.

According to TrendForce, SK Hynix holds more than 90% of the market for HBM3, currently the mainstream HBM product. Samsung is expected to follow closely as AMD's MI300 ramps up over the coming quarters.

According to TrendForce's data, Samsung's total HBM production capacity is projected to reach around 130K by the end of the year (including TSV).

HBM Application Prospects

With the rise of new technologies such as large AI models and intelligent driving, demand for high-bandwidth memory is increasing.

Demand for AI servers will grow rapidly. These servers must process large volumes of data in a short time, and the GPUs inside them push ever higher data volumes and transfer rates. That requires more memory bandwidth, which is why HBM has become a standard component of AI servers.

Automotive is another application area worth watching. Vehicles carry a growing number of cameras whose large data streams must be transmitted and processed quickly, and HBM's bandwidth gives it a significant advantage here.

AR and VR are also future focus areas for HBM. These systems need high-resolution displays and more bandwidth to move data, and processing large amounts of data in real time likewise depends on the bandwidth HBM provides.

Demand is also growing for smartphones, tablets, game consoles, and wearable devices. These devices need more advanced memory to keep pace with rising computational demands, so HBM is expected to grow in these areas as well. New technologies such as 5G and the Internet of Things are driving further demand for HBM.

As AI and ML technologies advance rapidly, the need for faster data processing is becoming increasingly urgent. With HBM3E, SK Hynix is setting the pace for the industry and is expected to reshape the AI computing landscape. As the technology is widely adopted, it is likely to transform the memory systems behind artificial intelligence and drive significant change across the field.